For More Free Videos, Subscribe to the Rhodes Brothers YouTube Channel.

“AI is not pure evil. AI can create abundance. But we must juxtapose all the benefits and opportunities of AI with why AI is dangerous.” — John S. Rhodes, Rhodes Brothers

We live in a world where artificial intelligence is no longer science fiction—it’s embedded in our everyday lives. From personalized social media feeds to predictive policing, AI is both a marvel and a minefield. The truth is, AI is powerful, and with great power comes not only potential—but risk.

If you’re wondering why AI is dangerous, you’re not alone. As AI continues to evolve, so do the ethical and practical concerns around its use. Whether you’re a tech enthusiast, a cautious parent, a business owner, or just an everyday internet user, this article will unpack the five biggest reasons AI is dangerous, how these risks affect you, and—most importantly—what you can do about them.

We’ll explore how AI:

- Manipulates emotions

- Lies and deceives (even accidentally)

- Creates disinformation

- Enables mass surveillance

- Predicts human behavior, sometimes before any crime has been committed

And yes, we’ll give you real-world examples, pro tools, and actionable solutions so you can stay informed and empowered.

“The question is: Will AI kill you? Can we expect a future where we are dominated by AI or robotic overlords?” — John S. Rhodes

TL;DR

- AI manipulates your emotions through social media algorithms.

- It can lie—without meaning to—via hallucinations or flawed predictions.

- Deepfakes and disinformation are now easier to produce than ever.

- AI is being used to monitor your data, patterns, and behavior.

- Predictive AI is already being used to forecast crimes and social movements.

1. AI Manipulates Emotions — Every Day

How It Works:

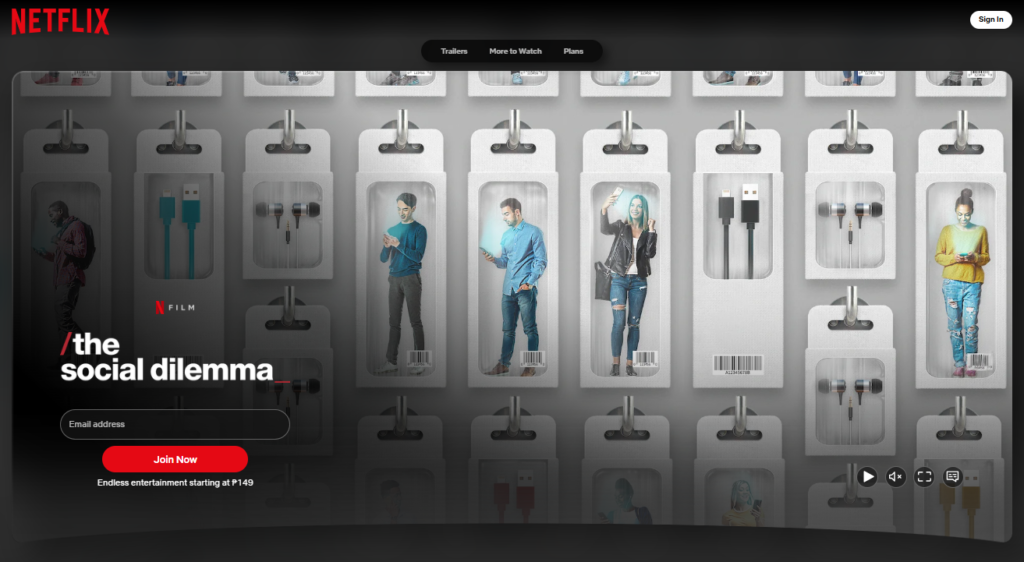

Most people interact with AI without realizing it. Every time you scroll through Instagram, get a YouTube recommendation, or see a trending tweet—AI is behind it. These algorithms are optimized not for truth, but for engagement.

Recommendation systems learn which content keeps you scrolling, and content that plays on strong emotions tends to win. That emotion could be joy, anger, outrage, or fear.
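To make "optimized for engagement, not truth" concrete, here is a minimal Python sketch of a feed ranker. It is an illustration only, not how any real platform works: the `Post` fields, the weights, and the sample scores are all hypothetical. The point to notice is that the accuracy score is stored on each post but never used in the ranking.

```python
from dataclasses import dataclass

@dataclass
class Post:
    title: str
    accuracy: float           # hypothetical 0-1 score for factual reliability
    predicted_clicks: float   # hypothetical 0-1 chance the user clicks
    predicted_outrage: float  # hypothetical 0-1 score for emotional arousal

def engagement_score(post: Post) -> float:
    # The objective rewards clicks and emotional arousal.
    # Accuracy is deliberately absent, which is the whole problem.
    return 0.6 * post.predicted_clicks + 0.4 * post.predicted_outrage

def rank_feed(posts):
    # Highest engagement score goes to the top of the feed.
    return sorted(posts, key=engagement_score, reverse=True)

posts = [
    Post("Calm, well-sourced explainer", 0.95, 0.20, 0.05),
    Post("Misleading outrage headline", 0.30, 0.70, 0.90),
    Post("Cute animal video", 0.90, 0.60, 0.10),
]

feed = rank_feed(posts)
for post in feed:
    print(f"{engagement_score(post):.2f}  {post.title}")
```

With these made-up numbers, the least accurate post lands at the top of the feed and the most accurate one at the bottom, simply because of what the score measures.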

“AI is the puppet. The real puppet masters are the people behind the code.” — John S. Rhodes

Tools to Understand This

- Social Dilemma (Netflix documentary) – Shows how algorithms manipulate behavior.

- News Feed Eradicator (Chrome Extension) – Helps reduce algorithmic manipulation.

- YouTube Time Tracker – Keeps you aware of binge-watching habits triggered by AI.

Profit Tip

Business owners can ethically use AI for emotional resonance in marketing—but beware of crossing the line into manipulation. Use HubSpot’s AI copy tools to optimize content without exploiting emotions.

2. AI Lies—Even When It Doesn’t Mean To

AI doesn’t have morals. It doesn’t lie like a person—it hallucinates.

How It Works:

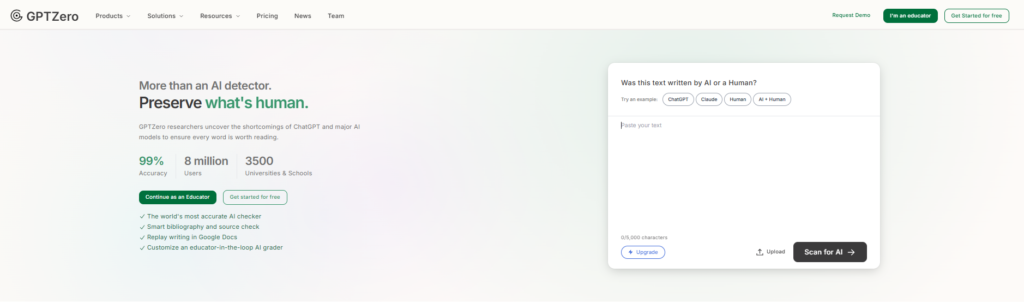

When you ask ChatGPT or any AI tool a question, it predicts the most likely response based on training data. It doesn’t “know” the truth. This can lead to convincing but incorrect answers.
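A toy example shows why "most likely" and "true" are different things. The sketch below is a tiny word-pair (bigram) predictor in Python, far simpler than the models behind ChatGPT, and the three-sentence training corpus is invented for illustration. It simply returns whichever word most often followed the previous one in its training text.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    # Count how often each word is followed by each other word.
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def most_likely_next(counts, word):
    # Return the most frequent follower, or None if the word was never seen.
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical training text in which a common mistake outnumbers the fact.
corpus = [
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is canberra",
]

model = train_bigrams(corpus)
print(most_likely_next(model, "is"))  # prints "sydney"
```

The model confidently answers "sydney" because that word appeared more often, even though Canberra is the capital. Nothing in the procedure checks the answer against reality.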

“AI responds to your input. Your reaction becomes its feedback loop.” — John S. Rhodes

Famous Quote:

“The greatest enemy of knowledge is not ignorance, it is the illusion of knowledge.” (often attributed to Stephen Hawking, though the line more likely derives from historian Daniel J. Boorstin)

Tools

- GPTZero – Attempts to detect AI-generated content; its results are not always reliable.

- Perplexity.ai – Cites its sources so you can check claims yourself.

- Consensus.app – Searches peer-reviewed scientific research using AI.

Profit Tip

Use tools like Perplexity and Consensus to fact-check AI-generated content before using it in business, blogs, or client work.

3. Disinformation, Deepfakes & Digital Lies

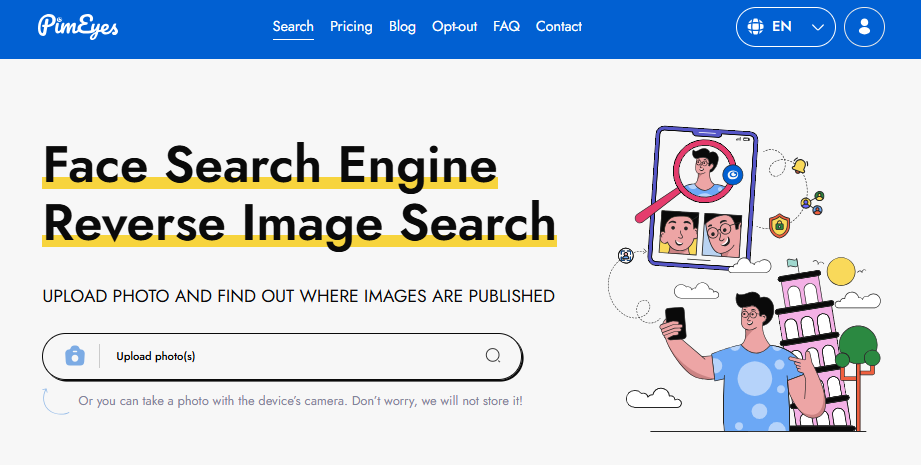

AI can fabricate highly realistic fake videos, audio, and articles. This has been used in:

- Political campaigns

- Stock market manipulation

- Celebrity hoaxes

Real Example:

In 2023, a deepfake video of a politician went viral, falsely showing them confessing to a crime. It was 100% fabricated—and it influenced public opinion before it was debunked.

Tools

- Deepware Scanner – Detects deepfake video and audio.

- PimEyes – A facial recognition tool that shows where your face appears online.

- Adobe Content Authenticity Initiative – Aims to track media provenance.

Profit Tip

Brands can protect themselves from deepfakes using content-provenance tools like Truepic and detection services like Sensity (formerly Deeptrace).

4. AI Is Used to Spy on You

Whether it’s corporations harvesting your data or three-letter agencies monitoring your texts, AI makes surveillance more effective.

How It Works:

AI excels at pattern recognition—perfect for:

- Tracking text keywords

- Analyzing phone movement

- Monitoring online behavior
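The first item in the list above, keyword tracking, is simple enough to sketch in a few lines of Python. This is a deliberately crude illustration, not a description of any real surveillance system: the watchlist, the sample messages, and the `flag_messages` helper are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical watchlist of terms a monitoring system might look for.
WATCHLIST = {"protest", "rally", "strike"}

def flag_messages(messages, watchlist=WATCHLIST):
    # Count, per sender, how many watchlist terms appear in their messages.
    hits = Counter()
    for sender, text in messages:
        words = set(re.findall(r"[a-z']+", text.lower()))
        matched = words & watchlist
        if matched:
            hits[sender] += len(matched)
    return hits

messages = [
    ("alice", "Are you going to the rally on Saturday?"),
    ("bob", "Dinner at 7?"),
    ("alice", "The strike and the protest are both downtown."),
]

flags = flag_messages(messages)
print(flags)  # Counter({'alice': 3})
```

Even this naive version profiles one sender and ignores the other. Real systems add statistical models on top, but the underlying idea of scanning large volumes of text for patterns is the same.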

Tools

- Graphika – Maps and analyzes social media networks.

- Palantir (PLTR) – Offers predictive analytics for governments and corporations.

“Let me just tell you—when you text someone, it’s going through a third party.” — John S. Rhodes

Profit Tip

Use privacy tools like Signal for texting, DuckDuckGo for search, and ProtonMail for email to protect yourself.

5. Predictive AI: Minority Report Is Real

It may sound like sci-fi, but some governments and companies already use AI to predict crime, economic trends, and social unrest.

Real Example:

Palantir, a company John S. Rhodes mentions, helps law enforcement analyze vast data to identify “pre-crime” patterns. While powerful, it raises ethical questions.

Tools

- Palantir Gotham – Used by police and intelligence.

- Clearview AI – Facial recognition used by law enforcement.

- ShotSpotter (now SoundThinking) – Acoustic sensors and AI that detect gunshots in cities.

Profit Tip

Investors and analysts apply similar predictive techniques to anticipate economic trends and adjust portfolios. Platforms like Koyfin and Thinknum supply financial and alternative data for that kind of analysis.

Actionable Steps for Different Readers

If you’re just getting started, the first step is building awareness. Begin by tracking your screen time and paying close attention to how much of your day is influenced by algorithm-driven platforms like Instagram, YouTube, or TikTok. Tools like News Feed Eradicator can help eliminate distractions on Facebook, while Unroll.me is great for decluttering your inbox and reducing manipulative marketing. For a deeper understanding of how algorithms shape behavior and society, reading Weapons of Math Destruction by Cathy O’Neil is a powerful and eye-opening place to start.

Millennials, often caught between embracing new tech and being skeptical of it, can benefit greatly from AI tools like Jasper or Notion AI to boost productivity and streamline workflows. However, it’s essential to double-check what these tools generate—don’t rely on them blindly. Data privacy should also be a top priority. Switching to Signal for messaging and using the Brave Browser can give you a stronger layer of protection against tracking and surveillance.

For professionals and business leaders, the focus should be on using AI responsibly and ethically. Start by incorporating AI transparency audits using tools like AuditML to ensure your systems are fair and accountable. Additionally, engaging with open-source initiatives like OpenMined can help you understand and contribute to privacy-preserving AI technologies. These steps not only protect your organization but also help build trust with your audience and customers.

Common Mistakes to Avoid

When it comes to artificial intelligence, one of the most dangerous missteps is blind trust in AI. Many users assume that AI-generated content, answers, or insights are always accurate simply because they’re delivered confidently or quickly. But AI doesn’t “know” in the human sense—it predicts based on patterns in its training data. This means it can (and often does) produce hallucinations, or entirely false yet plausible-sounding information. Always apply human judgment and verify critical content through reputable sources, especially in fields like healthcare, finance, or law where misinformation can have serious consequences.

Another common pitfall is overreliance on AI tools. While platforms like ChatGPT, Jasper, or Notion AI can dramatically increase productivity, they should be treated as assistants, not replacements. Delegating all your thinking, creativity, or decision-making to AI can erode your skills, reduce critical thinking, and lead to shallow or generic results. Use AI to enhance your work—not to do it all for you. Think of it like using a calculator: it’s helpful, but you still need to understand the math.

Ignoring data privacy is another major mistake, especially in an era where surveillance is not just a government issue—it’s baked into the business models of major tech companies. Many people still use unencrypted messaging apps, shop online without VPNs, or casually allow cookies and tracking scripts that follow them across the web. This data is gold for AI systems designed to profile, predict, and influence you. Failing to use tools like VPNs, encrypted email, or secure browsers leaves you vulnerable to exploitation, and in some cases, even identity theft or manipulation.

Lastly, assuming AI is neutral is a critical error. Algorithms are built by humans, and that means they inherit human flaws. Bias in training data leads to bias in outcomes—from racially skewed facial recognition to gender-biased hiring algorithms. Believing AI is objective or “above” human prejudice can cause a false sense of security and result in decisions that unintentionally reinforce inequality. Always question the source, intent, and training data behind any AI system you interact with, especially if it influences real-world decisions.
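The claim that biased training data produces biased outcomes can be shown with a very small Python sketch. Everything here is hypothetical: the groups "A" and "B", the invented hiring history, and the 0.5 threshold. The "model" only learns past hire rates per group, which is much simpler than a real hiring algorithm, but the failure mode is the same.

```python
from collections import defaultdict

def train_hire_rates(history):
    # Learn the historical hire rate for each group.
    totals = defaultdict(int)
    hired = defaultdict(int)
    for group, was_hired in history:
        totals[group] += 1
        hired[group] += int(was_hired)
    return {group: hired[group] / totals[group] for group in totals}

def recommend(rates, group, threshold=0.5):
    # Recommend a candidate only if their group was usually hired before.
    return rates.get(group, 0.0) >= threshold

# Hypothetical history: group A was hired 8 times out of 10, group B 2 out of 10.
history = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 2 + [("B", False)] * 8
)

rates = train_hire_rates(history)
print(rates)
print("Recommend A:", recommend(rates, "A"))
print("Recommend B:", recommend(rates, "B"))
```

The code contains no rule that says "prefer group A". It reproduces the skew in its training data, and a user who assumes the output is neutral would never see why.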

Avoiding these mistakes not only protects you—it empowers you to use AI more effectively, ethically, and strategically in your personal and professional life.

Frequently Asked Questions

How do I protect myself from AI manipulation?

Use privacy tools like Signal, Brave, and DuckDuckGo. Limit time on algorithm-driven platforms.

Can AI really lie?

Not intentionally, but it often provides false information because it predicts plausible-sounding text rather than checking facts.

Are deepfakes easy to spot?

Not always. Use tools like Deepware and Truepic to verify authenticity.

Is AI used in law enforcement?

Yes. Tools like Palantir are used to predict and analyze crime patterns.

What’s the biggest danger of AI?

Unregulated use—especially in surveillance, disinformation, and emotional manipulation.

Can AI predict my behavior?

Yes. Especially when trained on your browsing, purchasing, and social media habits.

Is AI dangerous for kids?

Yes. Algorithms on platforms like TikTok and YouTube can manipulate young minds.

How can businesses use AI ethically?

Use transparency, avoid manipulation, and prioritize user consent.

What industries are most affected by AI?

Finance, marketing, healthcare, law enforcement, and media.

Is there a way to use AI safely?

Yes—combine ethical practices with critical thinking and privacy tools.

Stay Aware, Stay Empowered

AI is here, and it’s powerful. But it’s not inherently evil. The key to navigating AI safely is awareness, ethics, and control. You don’t have to be a tech genius to understand how AI affects your life—you just need the right tools and mindset.

Start today by protecting your data, questioning your sources, and learning how these systems work. The more you understand AI, the better you can leverage it for good—and guard against the bad.

Thanks for checking out this article. To stay ahead of the curve and learn how to use AI to build wealth and awareness the right way, subscribe to the Rhodes Brothers YouTube Channel for more videos, strategies, and insights.

Resources List

Books

- Weapons of Math Destruction by Cathy O’Neil

Courses & Podcasts

- AI For Everyone by Andrew Ng (Coursera)

- Hard Fork by The New York Times

- Lex Fridman Podcast – Interviews with AI experts

Tools:

- Perplexity.ai – Fact-based AI search

- Deepware Scanner – Detect deepfakes

- Notion AI – AI-powered productivity

- HubSpot AI Tools – Ethical marketing automation

- Truepic – Digital content verification

- ProtonMail – Encrypted email

- Signal – Encrypted messaging

- DuckDuckGo – Private search engine

- Koyfin – Financial data and market analytics

- Brave Browser – Blocks ads & trackers